Increasing Accessibility to AI

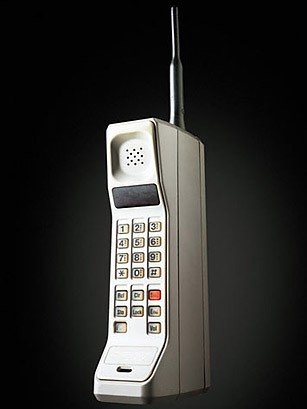

The first cell phone ever sold was called the DynaTAC 8000X. The year was 1983, and for the low, low price of $3,995, you could have been an early adopter. The phone itself took ten hours to charge and had a battery life of half an hour. It was a large, unwieldy thing with that peculiar look of the future as people in the ’80s imagined it. Almost nobody bought one.

This was once the “future”

Today, however, it’s a near certainty that as you read this, your cell phone is either within arm’s reach or charging nearby. What began as a boutique item for hardcore technologists, nerds, and people with, shall we say, plenty of disposable income is now ubiquitous. Cell phones have supplanted landlines for much of the world’s population even as they’ve grown exponentially in complexity. In the way a parrot resembles its dinosaur ancestors, today’s smartphones bear only a passing resemblance to the DynaTAC 8000X.

Largely, this is how technologies get adopted. Early versions are cumbersome and unreliable. As a technology grows in popularity, it becomes sleeker, more refined, and more dependable. The simple march of time has something to do with this, but popularity is an incredibly important component as well. After all, when was the last time you saw someone wearing Google Glass in the wild?

Artificial intelligence is following a similar trajectory. We’ve weathered a pair of AI winters (periods when business and government lost faith in the technology because it was overhyped or because training models took far too many resources), but things have changed. AI adoption is growing, and it’s growing quickly. The issue is where it’s growing: almost exclusively in affluent countries, and skewed towards the most successful companies in those countries.

It doesn’t have to be that way.

In our last piece, we talked about the impact of AI and about making sure our work both increases the common good and uses fewer resources to do it. But creating responsible AI doesn’t just mean creating responsible outcomes. It means creating widespread accessibility. Here’s how we can do it:

Democratizing costs

A few years back, one of my colleagues worked at a well-funded AI startup trying to solve some complex challenges. Their strategy was to distribute models across hundreds, if not thousands, of machines globally. Specifically, they rented those machines from a variety of research institutions, gaming cafes, and so on. Things were going well enough, but then something happened: Ethereum.

Suddenly, the price of those compute resources went up. Cryptominers were outbidding the company and, because the miners were getting an immediate return on their investment, the startup couldn’t really compete. Research slowed down because of simple supply and demand.

As we discussed in our last piece, training models has become expensive, both environmentally and monetarily. If only the most successful companies can afford to chase the most robust solutions, we’re in danger of AI monopolies, not AI democratization.

This isn’t just something we’ve noticed. Recently, the Stanford Institute for Human-Centered Artificial Intelligence spearheaded a project with a goal of increasing access to the compute resources usually dominated by the biggest tech giants. It’s a really hopeful step towards making these resources available to universities where the next generation of computer scientists is learning how to create the next generation of AI.

That project is just a drop in a pretty big bucket, but it’s a positive step. We need to prevent a world where only the biggest companies can afford to work on the biggest research projects, because that leads to a future where investment in the field dries up and AI works only for the affluent, not for the greater good.

Democratizing our partnerships

If we want to increase accessibility to AI, we need to be active in searching out partners who share our values.

Take data labeling, for example. Big labeling houses produce more labels, more quickly, for larger companies because those companies pay for the privilege. Their labeling tasks may pay better than smaller companies can afford, which means labelers do those tasks first. You can’t blame the labelers for that, either! If you were given two options with nearly identical work, chances are you’d choose the one that paid more.

But not every labeling company functions that way. Companies like iMerit and Daivergent provide best-in-class labels while giving work to underrepresented populations. Because these companies actively manage their workforces (instead of using the open crowd model other shops rely on), they’re ideal for tasks where data must be labeled as accurately as possible and where there isn’t a need for, say, millions upon millions of labels. Then there’s the ethical component: you’re providing meaningful work for people who are often ignored by the tech ecosystem (and often by society).

Nothing increases accessibility to AI like partnering with companies that are actively trying to do just that.

Hiring by work product, not diploma

The best AI programs in America aren’t exactly at the most affordable schools. Carnegie Mellon, MIT, and Stanford make up the top three and, while the education you’d receive there is surely quite good, it’s something that’s simply out of reach for most people in the world.

But great computer scientists don’t just come from great universities. With the prevalence of online machine learning courses from places like Udemy, Coursera, Udacity, and more, people are bootstrapping their AI education from the comfort of their own homes. And with the COVID pandemic raging, these programs figure to grow in popularity as fewer and fewer students are willing to shell out massive tuition costs to stare at Zoom all day.

Here we’d like to point out that these courses, in and of themselves, are increasing access to AI. Full stop. Being able to learn everything from the rudiments to some really technical concepts online, for a fraction of the cost, is such a boon for the industry. More people getting interested and learning how to run machine learning projects means more varied approaches, more hours spent on big problems, and a more diverse pool of practitioners than we have today. AI as a subject is far more accessible than biochemistry because all you really need is a computer, some training, and some patience.

For businesses, we need to do our part too. Instead of judging prospective hires on their university, judge them by their code, their problem solving, their CV. There are incredibly smart people at places like MIT and Stanford. But they don’t have a monopoly on the next generation of machine learning professionals.

Reducing the barrier to entry at every level

Overall, this is what it’s really about. AI shouldn’t be something we reserve for the few people who can currently afford to employ it or study it. If you believe AI holds the promise we believe it does, then keeping it unaffordable, working only with big providers, and hiring only from big schools actively works against that promise. Increased access will bring increased success to every part of the discipline. It’s just that simple.

Think back to the example we started this piece with: cell phones. Where once only the most free-spending businessman could afford a $4,000 phone, today phones give people in far-flung locales access not only to calls but to maps, news, social media, and even a mediocre video game to unwind with. Democratizing access to mobile phones has made us more connected to each other and unlocked opportunities that simply couldn’t exist without them. AI can be next.

And with online learning gaining popularity, projects like Stanford’s increasing access to serious compute power, and an abundance of potential partners for your next project, accessibility to AI is increasing. It’s our responsibility in the industry to make sure that momentum doesn’t stall.